Richard Gott, Sandra Duggan, Ros Roberts and Ahmed Hussain

Introduction

Our research is based on the belief that there is a body of knowledge which underlies an understanding of scientific evidence. Certain ideas which underpin the collection, analysis and interpretation of data have to be understood before we can handle scientific evidence effectively. We have called these ideas concepts of evidence. Some pupils/students will pick up these ideas in the course of studying the more traditional areas of science, but many will not. These students will not understand how to evaluate scientific evidence unless the underlying concepts of evidence are specifically taught. If these ideas are to be taught, then they need to be carefully defined.

We are in the process of developing a comprehensive, but as yet tentative, definition of concepts of evidence ranging from the ideas associated with a single measurement to those which are associated with evaluating evidence as a whole. What follows is the latest version which has been, and continues to be, informed by research and writing in primary and secondary science education, in science-based industry and in the public understanding of science. Our definition is by no means complete and we welcome comments or suggestions from readers of this site.

The reader should note that we are not suggesting that students need to understand all of these concepts. Although we believe that some of these ideas are fundamental and appropriate at any age, others may be necessary only for a student engaged in a particular branch of science.

We are aware that some concepts, such as sensitivity, can have several meanings in different areas of science. We aim to point this out where applicable.

Further information:

- The latest downloadable version of the complete list can be obtained here.

- A concept map for ‘the thinking behind the doing’ in scientific practice can be found below, and as a PDF file here.

- Investigations annotated to illustrate the application of ‘the thinking behind the doing’ approach can be found here.

- A version produced in collaboration with teachers (funded by AQA) which describes the sub-set of the complete list appropriate to GCSE science in the UK can be found here.

- A report detailing a recent research project and links to the instruments used can be found here.

- Research publications can be found here.

- This work has been influential in framing curriculum developments in England and has been cited in the US’s Framework for K-12 Science Education and the PISA 2015 Science Framework.

Background

Fundamental ideas

Investigations must be approached with a critical eye. What sort of link is to be established, with what level of measurement and how will opinion and data be weighed as evidence? This pervades the entire scheme and sets the context in which all that follows needs to be judged.

| Topic | Understanding that: | Notes |

| Opinion and data | It is necessary to distinguish between opinion based on scientific evidence and ideas on the one hand, and opinion based on non-scientific ideas (prejudice, whim, hearsay ...) on the other. | In the UK, the Royal Society’s motto ‘Nullius in verba’, which roughly translates as ‘take nobody’s word for it’, emphasises the central importance in science of evidence over assertion, as scientists make claims following investigations into the real world. All scientific research is judged on the quality of its evidence. |

| Links | A scientific investigation seeks to establish links (and the form of those links) between two or more variables. | |

| Association and causation | Links can be causal (change in the value of one variable causes a change in another), or associative (changes in one variable and changes in another are both linked to some third, and possibly unrecognised, variable or variables). | |

| Types of measurement | Interval data (measurements of a continuous variable) are more powerful than ordinal data (rank ordering) which are more powerful than categoric data (a label). | |

| Extended tasks | Some measurements, for instance, can be very complicated and constitute a task on their own, but they are only meaningful when set within the wider investigation(s) of which they will form a part. | |
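The difference between a causal and an associative link can be illustrated numerically. The following is a minimal sketch using invented data, in which a third variable `z` drives both `x` and `y`; the function names and all values are assumptions made for illustration only. The plain correlation between `x` and `y` is strong, yet it vanishes once `z` is allowed for (a partial correlation).

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

def partial_r(xs, ys, zs):
    """Correlation between xs and ys after allowing for a third variable zs."""
    r_xy = pearson_r(xs, ys)
    r_xz = pearson_r(xs, zs)
    r_yz = pearson_r(ys, zs)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# Invented data: z is the (possibly unrecognised) third variable.
z = [1, 1, 2, 2, 3, 3, 4, 4]
x_noise = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
y_noise = [0.5, -0.5, -0.5, 0.5, 0.5, -0.5, -0.5, 0.5]
x = [2 * zi + e for zi, e in zip(z, x_noise)]
y = [3 * zi + e for zi, e in zip(z, y_noise)]

r_xy = pearson_r(x, y)            # strong association between x and y
r_xy_given_z = partial_r(x, y, z)  # association left once z is allowed for

print(f"r(x, y) = {r_xy:.3f}")
print(f"r(x, y | z) = {r_xy_given_z:.3f}")
```

A strong correlation on its own therefore cannot distinguish between the two kinds of link; that judgement depends on the design of the investigation.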

Observation

Observation of objects and events can lead to informed description and the generation of questions to investigate further. Observation is one of the key links between the ‘real world’ and the abstract ideas of science. Observation, in our definition, does not include ‘measurement’ but rather deals with the way we see objects and events through the prism of our understanding of the underlying substantive conceptual structures of science.

| Topic | Understanding that: | Notes |

| Observing objects | Objects can be ‘seen’ differently depending on the conceptual window used to view them. | A low profile car tyre can be seen as nothing more than that, or it can be seen as a way of increasing the stiffness of the tyre, thus giving more centripetal force with less deformation and thus improving road holding. |

| Observing events | Events can similarly be seen through different conceptual windows. | The motion of a parachute is seen differently when looked at through a framework of equal and unequal forces and their corresponding accelerations. |

| Using a key | The way in which an object can be ‘seen’ can be shaped by using a key. | E.g. a branching key gives detailed clues as to what to ‘see’. It is, then, a heavily guided substantive concept-driven observation. |

| Taxonomies | Taxonomies are a means of using conceptually driven observations to set up classes of objects or organisms that exhibit similar/different characteristics or properties with a view to using the classification to solve a problem. | Organisms observed in a habitat may be classified according to their feeding characteristics (to track population changes over time, for instance), or a selection of materials may be classified into efficient and inefficient conductors. |

| Observation and experiment | Observation can be the start of an investigation, experiment or survey. | Noticing that shrimp populations vary in a stream leads to a search for a hypothesis as to why that is the case, and an investigation to test that hypothesis. |

| Observation and map drawing | Map drawing is a technique used in biological and geological fieldwork to map a site based on conceptually driven observations that illustrate features of scientific interest. | An ecologist may construct a map of a section of a stream illustrating areas of varying stream flow rate or composition of the stream bed. |

| Note | We regard ‘observation’ as being essentially substantive in nature, requiring the use of established ideas of force, for instance, as a window on how we see the world. As such it is included here only because of its crucial role in raising questions for investigation. |

Measurement

Measurement must take into account inherent variation due to uncontrolled variables and the characteristics of the instruments used. This section lies at the very centre of our model for measurement, data and evidence and is fundamental to it.

| Topic | Understanding that: | Notes |

| Inherent variation | The measured value of any variable will never repeat unless all possible variables are controlled between measurements – circumstances which are very difficult to create. | Such uncertainties are inherent in the measurement process that lies at the heart of science; they do not represent a failure of science, or of scientists. |

| Human error | The measured value of any variable can be subject to human error, which can be random or systematic. | |

A framework for data and evidence

In any discussion of the place of data and evidence in science or engineering, we must avoid the trap of failing to define terms and, as a consequence, rendering the argument unintelligible. We shall therefore begin by defining what we mean by data and evidence.

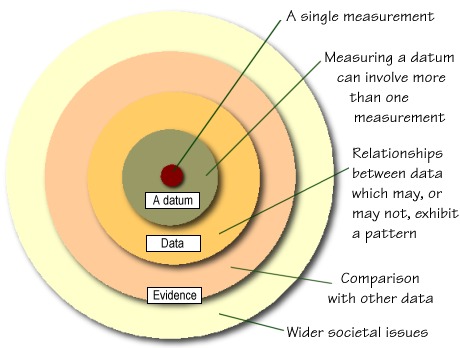

We take datum to mean the measurement of a parameter e.g. the volume of gas or the type of rubber. This does not necessarily mean a single measurement: it may be the result of averaging several repeated measurements and these could be quantitative or qualitative.

Data we take to be no more or less than the plural of datum, to state the obvious.

Evidence, on the other hand, we take as data which have been subjected to some form of validation so that it is possible, for instance, to assign a ‘weight’ to the data when coming to an overall judgement. This process of weighting will need to look wider than the data itself. It will need to consider, for example, the quality of the experiment and the conditions under which it was undertaken, together with its reproducibility by other workers in other circumstances and perhaps the practicality of implementing the outcomes of the evidence.

We begin our definition in the centre of the figure above with the ideas that underpin the making of a single measurement and work outwards. This seems a logical way to proceed, but please note that we are not suggesting that this equates with the order of understanding necessary for carrying out an experiment or the order in which these ideas are best taught.

Making a single measurement

To make a single measurement, the choice of an instrument must be suited to the value to be measured. Making an appropriate choice is informed by an understanding of the basic principles underlying measuring instruments.

1 Underlying relationships

All instruments rely on an underlying relationship which converts the variable being measured into another that is easily read. For instance, the following (volume, temperature and force) are measured by instruments which convert each variable into length:

- a measuring cylinder converts volume to a length of the column of liquid

- a thermometer converts temperature to a change in volume and then to a change in length of the mercury thread

- a force meter converts a force into the changing length of a spring

Other instruments convert the variable to an angle on a curved scale, such as a car speedometer. Electronic instruments convert the variable to a voltage.

Some instruments are not so obviously ‘instruments’ and may not be recognised as such. One example is the use of lichen as an indicator of pollution and another is pH paper where chemical change is used as the basis of the ‘instrument’ and the measurement is a colour. Other instruments rely on more complex and less direct relationships.

| Topic | Understanding that: | Example |

| 1. Linear relationships | … most instruments rely on an underlying and preferably linear relationship between two variables. | A thermometer relies on the relationship between the volume of a liquid and temperature. |

| 2. Non-linear relationships | … some ‘instruments’, of necessity, rely on non-linear relationships. | Moving iron ammeter, pH. |

| 3. Complex relationships | … the relationship may not be straightforward and may be confounded by other factors. | The prevalence, or size, of a species of lichen is an indicator of the level of pollution but other environmental factors such as aspect, substrate, or air movement can also affect the distribution of lichen. |

| 4. Multiple relationships | … sometimes several relationships are linked together so that the measurement of a variable is indirect. | Medical diagnosis often relies on indirect, multiple relationships. Braking distance is an indirect measure of frictional force. Proxy measures are very important in ‘historical’ sciences, such as geology/earth science and in the study of climate change e.g. tree rings and ice cores as proxy measures of climate conditions. |

2 Calibration and error

Instruments must be carefully calibrated to minimise the inevitable uncertainties in the readings. All instruments must be calibrated so that the underlying relationship is accurately mapped onto the scale. If the relationship is non-linear, the scale has to be calibrated at more points to map that non-linearity. All instruments, no matter how well-made, are subject to error. Each instrument has finite limits on, for example, its resolution and sensitivity.
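As a rough illustration of mapping an underlying relationship onto a scale, the sketch below calibrates a thermometer at two end points and then checks one intervening point. The thread lengths, the reference value and the function name `two_point_calibration` are hypothetical; a linear relationship between thread length and temperature is assumed.

```python
def two_point_calibration(reading_low, value_low, reading_high, value_high):
    """Return a function that maps a raw reading to a calibrated value,
    assuming a linear relationship between the two end points."""
    slope = (value_high - value_low) / (reading_high - reading_low)

    def to_value(reading):
        return value_low + slope * (reading - reading_low)

    return to_value

# Hypothetical thread lengths (mm) observed at the end points 0 °C and 100 °C.
to_celsius = two_point_calibration(20.0, 0.0, 220.0, 100.0)

# Check an intervening point: hypothetical thread length observed in a
# reference bath known to be at 50 °C.
observed_length_mm = 122.0
linearity_error = to_celsius(observed_length_mm) - 50.0

print(f"Scale reads {to_celsius(observed_length_mm):.1f} °C at a true 50.0 °C")
print(f"Departure from linearity: {linearity_error:+.1f} °C")
```

A non-zero departure at an intervening point suggests that the straight-line assumption does not hold across the whole scale, so more calibration points would be needed.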

| Topic | Understanding that: | Example |

| 5. End points | … the instrument must be calibrated at the end points of the scale. | A thermometer must be calibrated at 0 °C and 100 °C. |

| 6. Intervening points | … the instrument must be calibrated at points in between to check the linearity of the underlying relationship. | A thermometer must be calibrated at a number of intervening points to check, for instance, for non-linearity due to non-uniform bore of the capillary. |

| 7. Zero Errors | … there can be a systematic shift in scale and that instruments should be checked regularly. | If the zero has been wrongly calibrated, if the instrument itself was not zeroed before use or if there is fatigue in the mechanical components, a systematic error can occur. |

| 8. Overload, limiting sensitivity / limit of detection | … there is a maximum (full scale deflection) and a minimum quantity which can be measured reliably with a given instrument and technique. | The lower and upper ends of the scale of a measuring instrument place limits on the lowest and highest values that can be measured. It is all too easy to read an electronic meter (in particular) without realising it is on its end stop. |

| 9. Sensitivity* | … the sensitivity of an instrument is a measure of the amount of error inherent in the instrument itself. | An electronic voltmeter will give a reading which fluctuates slightly. |

| 10. Resolution and error | … the resolution is the smallest division which can be read easily. The resolution can be expressed as a percentage. | If the instrument can measure to 1 division and the reading is 10 divisions, the error can be expressed as 10±1 or as a percentage error of 10%. |

| 11. Specificity** | … an instrument must measure only what it purports to measure. | This is of particular significance in biology where indirect measurements are used as ‘instruments’ e.g. bicarbonate indicator used as an indirect measure of respiratory activity in woodlice could be affected by other acids such as that produced by the woodlice during excretion. |

| 12. Instrument use | … there is a prescribed procedure for using an instrument which, if not followed, will lead to systematic and / or random errors. | Taking a thermometer out of the liquid to read it will lead to systematically low readings. More specifically, there is a prescribed depth of immersion for some thermometers which takes account of the expansion of the glass and the mercury (or alcohol) which is not in the liquid being measured. |

| 13. Human error | … even when an instrument is chosen and used appropriately, human error can occur. | Scales on measuring instruments can easily be misread. |

*Sensitivity and **specificity have a different meaning in medicine in the measurement of disease where sensitivity is the true positive rate, that is, the proportion of patients with the disease who are correctly ‘measured’ or identified by the test. Specificity is the proportion of patients without the disease who are correctly measured or identified by the test. These two measures describe the ‘measurement efficiency’.
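The medical definitions of sensitivity and specificity given above can be written as simple proportions. The counts in this sketch are invented for illustration.

```python
def sensitivity(true_positives, false_negatives):
    """Proportion of patients WITH the disease correctly identified by the test."""
    return true_positives / (true_positives + false_negatives)

def specificity(true_negatives, false_positives):
    """Proportion of patients WITHOUT the disease correctly identified by the test."""
    return true_negatives / (true_negatives + false_positives)

# Hypothetical screening results:
# 100 patients have the disease: 90 test positive, 10 are missed.
# 200 patients do not have it: 180 test negative, 20 are wrongly flagged.
sens = sensitivity(true_positives=90, false_negatives=10)
spec = specificity(true_negatives=180, false_positives=20)

print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```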

3 Reliability and validity of a single measurement

Any measurement must be reliable and valid. A measurement, once made, must be scrutinised to make sure that it is valid (that it measures what was intended) and that it can be relied upon. Repeating readings and triangulation, by using more than one of the same type of instrument or by using another type of instrument, can increase reliability.

| Topic | Understanding that: | Example |

| 14. Reliability | … a reliable measurement requires an average of a number of repeated readings; the number needed depends on the accuracy required in the particular circumstances. | Measurement of blood alcohol level can be assessed with a breathalyser, but at least 3 independent readings are made before the measure is considered a legal measurement. |

| 15. Reliability | … instruments can be subject to inherent inaccuracy so that using different instruments can increase reliability. | Measurement of blood alcohol level can be assessed with a breathalyser and cross checked with a blood test. Temperature can be measured with a mercury, alcohol and digital thermometer to ensure reliability. |

| 16. Reliability | … human error in the use of an instrument can be overcome by independent, random checks. | Spot checks of measurement techniques by co-workers are sometimes built into routine procedures. |

| 17. Validity | … measures that rely on complex or multiple relationships must ensure that they are measuring what they purport to measure. | A complex technique for measuring a vitamin may be measuring more than one form of the same vitamin. |
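Repeated readings and triangulation between instruments can be sketched as follows. The readings, the tolerance and the helper name `agree` are assumptions chosen for illustration; in practice the acceptable difference depends on the accuracy required.

```python
from statistics import mean

def agree(value_a, value_b, tolerance):
    """True if two measurements differ by no more than the given tolerance."""
    return abs(value_a - value_b) <= tolerance

# Hypothetical repeated temperature readings (°C) from two types of thermometer.
mercury_readings = [21.4, 21.6, 21.5]
digital_readings = [21.7, 21.6, 21.8]

# Average the repeats from each instrument, then cross-check the instruments.
mercury_mean = mean(mercury_readings)
digital_mean = mean(digital_readings)
consistent = agree(mercury_mean, digital_mean, tolerance=0.5)

print(f"mercury: {mercury_mean:.2f} °C, digital: {digital_mean:.2f} °C")
print("instruments agree" if consistent else "instruments disagree")
```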

Measuring a datum

Moving from the measuring instrument itself, we now turn to the actual measurement of a datum. The measurement of a single datum may be required, or it may be one of several data to be measured. A significant element of science in industry is indeed about the sophisticated and careful measurement of a single parameter.

1 The choice of an instrument for measuring a datum

Measurements are never entirely accurate for a variety of reasons. Of prime importance is choosing the instrument to give the accuracy and precision required; a proactive choice rather than a reactive discovery that it wasn’t the right instrument for the job!

| Topic | Understanding that: | Example |

| 18. Trueness or accuracy* | … trueness is a measure of the extent to which repeated readings of the same quantity give a mean that is the same as the ‘true’ mean. | If the mean of a series of readings of the height of an individual pupil is 173 cm and her ‘true’ height, as measured by a clinic’s instrument is 173 cm, the measuring instrument is ‘true’. |

| 19. Non-repeatability | … repeated readings of the same quantity with the same instrument never give exactly the same answer. | Weighing yourself on a set of bathroom scales in different places on the bathroom floor, or standing on a slightly different position on the scales, will result in slightly differing readings. It is never possible to repeat the reading in exactly the same way. |

| 20. Precision | … precision (sometimes called “imprecision” in industry) refers to the observed variations in repeated measurements from the same instrument. In other words, precision is an indication of the spread of the repeated measurements around the mean. A precise measurement is one in which the readings cluster closely together. The less the instrument’s precision, the greater is its uncertainty. A precise measurement may not necessarily be an accurate or true measurement (and vice versa). The concept of precision is also called “reliability” in some fields. A more formal descriptor or assessment of precision might be the range of the observed readings, the standard deviation of those readings, or the standard error of the instrument itself. | For bathroom scales, a precise set of measurements might be: 175, 176, 175, 176, and 174 pounds. |

| 21. Reproducibility | … whereas repeatability (precision) relates to the ability of the method to give the same result for repeated tests of the same sample on the same equipment (in the same laboratory), reproducibility relates to the ability of the method to give the same result for repeated tests of the same sample on equipment in different laboratories. | ‘Round Robins’ are often used to check between different laboratories. A standardised sample is sent to each lab and they report their measurement(s) and degree of uncertainty. Labs are then compared. |

| 22. Outliers in relationships | … outliers, aberrant or anomalous values in data sets should be examined to discover possible causes. If an aberrant measurement or datum can be explained by poor measurement procedures (whatever the source of error), then it can be deleted. | Outliers may be due to errors discussed above, for example. In medical laboratory practice, outliers may have serious implications if not explored. |

* Accuracy is a term which is often used rather loosely to indicate the combined effects of precision and trueness. But, in some science-based industries the distinction we have defined here is used widely so that, for example, the precision and accuracy of a given measurement are quoted routinely.
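The distinction between trueness and precision can be made concrete with the bathroom-scale readings from the table above. The reference weight below is a hypothetical value introduced only for this sketch.

```python
from statistics import mean, stdev

# Repeated readings from the bathroom-scale example (pounds).
readings_lb = [175, 176, 175, 176, 174]

# Hypothetical 'true' weight from a trusted reference instrument.
reference_lb = 178.0

# Trueness: how far the mean of the readings lies from the reference value.
bias = mean(readings_lb) - reference_lb

# Precision: how closely the readings cluster around their own mean.
spread = stdev(readings_lb)

print(f"bias = {bias:+.1f} lb, standard deviation = {spread:.2f} lb")
```

With these numbers the readings cluster within about a pound of each other but sit almost three pounds below the reference: a precise set of measurements that is not true.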

2 Sampling a datum

A series of measurements of the same datum can be used to determine the reliability of the measurement. We shall use the term sampling to mean the selection of any sub-set of a ‘population’. The ‘population’ might be the population of a species of animal or plant or even the ‘population’ of possible sites where gold might be found. We shall also take the population to mean the infinite number of repeated readings that could be taken of any particular measurement. We consider these together since their effect on the data is the same.

| Topic | Understanding that: | Example |

| 23. Sampling | … one or more measurements comprise a sample of all the measurements that could be made. | The measurement of a single blade of grass is a sample of all the blades of grass in a field. A single measurement of the bounce height of a ball is a sample of the infinite number of such bounces that could be measured. |

| 24. Size of sample | … the number of measurements taken. The greater the number of readings taken, the more likely they are to be representative of the population. | As more readings of, for example, the height of students in a college are taken, the more closely the sample is likely to represent the whole college population. The more times a single ball is bounced, the more the sample is likely to represent all possible bounces of that ball. |

| 25. Reducing bias in sample / representative sampling | … measurements must be taken using an appropriate sampling strategy, such as random sampling, stratified or systematic sampling so that the sample is as representative as possible. | In the above example of the height of college students, tables of random numbers can be used to select students. |

| 26. An anomalous datum | … an unexpected datum could be indicative of inherent variation in the data or the consequence of a recognised uncontrolled variable. | In the above example, a very small height may have been recorded from a child visiting the college and should not be part of the population being sampled; whereas a very low rebound height from a squash ball may occur as a result of differences in the material of the ball and is therefore part of the sample. |
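Random and stratified sampling strategies can be sketched with Python's standard `random` module in place of printed tables of random numbers. The student register, the year groups and the sampling fraction are hypothetical.

```python
import random

def simple_random_sample(population, size, seed=None):
    """Choose `size` members at random, without replacement."""
    rng = random.Random(seed)
    return rng.sample(population, size)

def stratified_sample(strata, fraction, seed=None):
    """Sample the same fraction from each stratum.

    `strata` maps a stratum name to the list of its members.
    """
    rng = random.Random(seed)
    chosen = {}
    for name, members in strata.items():
        count = max(1, round(len(members) * fraction))
        chosen[name] = rng.sample(members, count)
    return chosen

# Hypothetical college register of 200 students.
students = [f"student_{i:03d}" for i in range(200)]
sample = simple_random_sample(students, 20, seed=1)

# Hypothetical strata: 120 first-year and 80 second-year students.
by_year = {"year_1": students[:120], "year_2": students[120:]}
stratified = stratified_sample(by_year, fraction=0.1, seed=1)

print(len(sample), {name: len(group) for name, group in stratified.items()})
```

Stratifying keeps each year group represented in proportion to its size, which a simple random sample achieves only on average.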

3 Statistical treatment of measurements of a single datum

A group of measurements of the same datum can be described in various mathematical ways. The statistical treatment of a datum is concerned with the probability that a measurement is within certain limits of the true reading. The following are some of the basic statistics associated with a single datum:

| Topic | Understanding that: | Example |

| 27. Range | … the range is a simple description of the distribution and defines the maximum and minimum values measured. | Measuring the height of carbon dioxide bubbles on successive trials in a yeast experiment, the following measurements were recorded and ordered sequentially: 2.7, 2.9, 3.1, 3.1, 3.1, 3.3, 3.4, 3.4, 3.5, 3.6 and 3.7 cm. The range is 1.0 cm (3.7 – 2.7). |

| 28. Mode | … the mode is the value which occurs most often. | Continuing the example above, the mode is 3.1 cm. |

| 29. Median | … the median is the value below and above which there are half the measurements. | Continuing the example above, the median is 3.3 cm. |

| 30. Mean | … the mean (average) is the sum of all the measurements divided by the number of measurements. | Continuing the example above, the mean is 35.8 ÷ 11 ≈ 3.25 cm (quoted below as 3.2 cm). |

| 31. Frequency distributions. | … a series of readings of the same datum can be represented as a frequency distribution by grouping repeated measurements which fall within a given range and plotting the frequencies of the grouped measurements. | |

| 32. Standard deviation. | … the standard deviation (SD) is a way of describing the spread of normally distributed data. The standard deviation indicates how closely the measurements cluster around their mean. In other words, the standard deviation is a measure of the extent to which measurements deviate from their mean. The more closely the measurements cluster around the mean, the smaller the standard deviation. The standard deviation depends on the measuring instrument and technique – the more precise these are, the smaller the standard deviation of the sample or of repeated measurements. | Continuing the example above, SD = 0.30 cm. |

| 33. Standard deviation of the mean (standard error). | … the standard deviation of the mean describes the frequency distribution of the means from a series of readings repeated many times. The standard deviation of the mean depends on the measuring instrument and technique AND on the number of repeats. The standard error of a measurement is an estimate of the probable range within which the ‘true’ mean falls; that is, an estimate of the uncertainty associated with the datum. | Continuing the example above, SE = 0.09 cm. |

| 34. Coefficient of variation. | … the coefficient of variation is the standard deviation expressed as a percentage of the mean (CV = SD*100/mean). | Continuing the example above, CV = 9.4%. |

| 35. Confidence limits. | … confidence limits indicate the degree of confidence that can be placed on the datum. For example, ‘95% confidence limits’ means that the ‘true’ datum lies within 2 standard errors of the calculated mean, 95% of the time. Similarly ‘68% confidence limits’ means that the ‘true’ datum lies within 1 standard error of the calculated mean, 68% of the time. | Continuing the example above, the true value of the datum lies within 0.18 cm (2 standard errors) of 3.2 cm (the mean), 19 times out of 20. The upper and lower confidence limits at the 95% level are 3.38 (3.2 + 0.18) and 3.02 (3.2 – 0.18) respectively. In other words, the ‘true’ value lies between 3.02 and 3.38 cm, 95% of the time. |
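The statistics in the table above can be reproduced from the yeast-experiment readings with Python's standard `statistics` module. This is a sketch rather than a prescribed method: it assumes the sample standard deviation (dividing by n − 1) and uses the table's rule of thumb of 2 standard errors for the 95% limits. Intermediate values are not rounded here, so the confidence limits differ slightly from the rounded figures quoted in the table.

```python
from math import sqrt
from statistics import mean, median, mode, stdev

# Bubble heights (cm) from the yeast example in the table above.
heights_cm = [2.7, 2.9, 3.1, 3.1, 3.1, 3.3, 3.4, 3.4, 3.5, 3.6, 3.7]

n = len(heights_cm)
value_range = max(heights_cm) - min(heights_cm)  # spread between extremes
most_common = mode(heights_cm)                   # value occurring most often
middle = median(heights_cm)                      # half the readings lie either side
average = mean(heights_cm)                       # sum divided by count
sd = stdev(heights_cm)                           # sample standard deviation (n - 1)
se = sd / sqrt(n)                                # standard deviation of the mean
cv_percent = sd * 100 / average                  # SD as a percentage of the mean

# Approximate 95% confidence limits: mean plus or minus 2 standard errors.
lower_95 = average - 2 * se
upper_95 = average + 2 * se

print(f"range = {value_range:.1f} cm, mode = {most_common} cm, median = {middle} cm")
print(f"mean = {average:.2f} cm, SD = {sd:.2f} cm, SE = {se:.2f} cm, CV = {cv_percent:.1f}%")
print(f"95% limits: {lower_95:.2f} to {upper_95:.2f} cm")
```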

4 Reliability and validity of a datum

A datum must have a known (or estimated) reliability and validity before it can be used in evidence.

Any datum must be subject to careful scrutiny to ascertain the extent to which it:

- is valid: that is, has the value of the appropriate variable been measured? Has the parameter been sampled so that the datum represents the population?

- is reliable: for example, does the datum have sufficient precision? The wider the confidence limits (the greater the uncertainty), the less reliable the datum.

Only then can the datum be weighed as evidence. Evaluation of a datum also includes evaluating the validity of the ideas associated with the making of a single measurement.

| Topic | Understanding that: | Example |

| 36. Reliability | … a datum can only be weighed as evidence once the uncertainty associated with the instrument and the measurement procedures have been ascertained. | The reliability of a measurement of blood alcohol level should be assessed in terms of the uncertainty associated with the breathalyser (e.g. +/- 0.01) and in terms of how the measurement was taken (e.g. superficial breathing versus deep breathing). |

| 37. Validity | … a measurement must be of, or allow a calculation of, the appropriate datum. | The girth of a tree is not a valid indicator of the tree’s age. |

Data in investigations – looking for relationships

An investigation is an attempt to determine the relationship, or lack of one, between the independent and dependent variables or between two or more sets of data. Investigations take many forms but all have the same underlying structure.

1. The design of practical investigations

What do we need to understand to be able to appraise the design of an investigation in terms of validity and reliability?

1.1 Variable structure

Identifying and understanding the basic structure of an investigation in terms of variables and their types helps to evaluate the validity of data.

| Topic | Understanding that: | Example |

| 38. The independent variable | … the independent variable is the variable for which values are changed or selected by the investigator. | The type of ball in an investigation to compare the bounciness of different types of balls; the depth in a pond at which light intensity is to be measured. |

| 39. The dependent variable | … the dependent variable is the variable the value of which is measured for each and every change in the independent variable. | In the same investigations as above: the height to which each type of ball bounces; the light intensity at each of the chosen depths in the pond. |

| 40. Correlated variables | … in some circumstances we are looking for a correlation only, rather than any implied causation. | Foot size can be predicted from hand size (both ‘caused’ by other factors). |

| 41. Categoric variables | … a categoric variable has values which are described by labels. Categoric variables are also known as nominal data. | The variable ‘type of metal’ has values ‘iron’, ‘copper’ etc. |

| 42. Ordered variables | … an ordered variable has values which are also descriptions, labels or categories but these categories can be ordered or ranked. Measurement of ordered variables results in ordinal data. | The variable of size e.g. ‘very small’, ‘small’, ‘medium’ or ‘large’ is an ordered variable. Although the labels can be assigned numbers (e.g. very small=1, small=2 etc.) size remains an ordered variable. |

| 43. Continuous variables | … a continuous variable is one which can have any numerical value and its measurement results in interval data. | Weight, length, force. |

| 44. Discrete variables | … a discrete variable is a special case in which the values of the variable are restricted to integer multiples. | The number of discrete layers of roof insulation. |

| 45. Multivariate designs | … a multi-variate investigation is one in which there is more than one independent variable. | The effect of the width and the length of a model bridge on its strength. The effect of temperature and humidity on the distribution of gazelles in a particular habitat. |

1.2 Validity, ‘fair tests’ and controls

Uncontrolled variation can be reduced through a variety of techniques. ‘Fair tests’ and controls aim to isolate the effect of the independent variable on the dependent variable.

Laboratory-based investigations, at one end of the spectrum, involve the investigator changing the independent variable and keeping all the control variables constant. This is often termed ‘the fair test’, but is no more than one of a range of valid structures.

At the other end of the spectrum are ‘field studies’ where many naturally changing variables are measured and correlations sought. For example, an ecologist might measure many variables in a habitat over a period of time. Having collected the data, correlations might be sought between variables such as day length and emergence of a butterfly, using statistical treatments to ensure validity. The possible effect of other variables can be reduced by only considering data where the values of other variables are the same or similar.

In between these extremes are many types of valid design which involve different degrees of manipulation and control. Fundamentally, all these investigations have a similar structure; what differs are the strategies to ensure validity.

| Topic | Understanding that: | Example |

| 46. Fair test | … a fair test is one in which only the independent variable has been allowed to affect the dependent variable. | A laboratory experiment about the effect of temperature on dissolving time, where only the temperature is changed. Everything else is kept exactly the same. |

| 47. Control variables in the laboratory | … other variables can affect the results of an investigation unless their effects are controlled by keeping them constant. | In the above experiment, the mass of the chemical, the volume of liquid, the stirring technique and the room temperature are some of the variables that should be controlled. |

| 48. Control variables in field studies | … some variables cannot be kept constant and all that can be done is to make sure that they change in the same way. | In a field study on the effect of different fertilisers on germination, the weather conditions are not held constant but each experimental plot is subjected to the same weather conditions. The conditions are matched. |

| 49. Control variables in surveys | … the potential effect on validity of uncontrolled variables can be reduced by selecting data from conditions that are similar with respect to other variables. | In a field study to determine whether light intensity affects the colour of dog’s mercury leaves, other variables are recorded, such as soil nutrients, pH and water content. Correlations are then sought by selecting plants growing where the value of these variables is similar. |

| 50. Control group experiments | … control groups are used to ensure that any effects observed are due to the independent variable(s) and not some other unidentified variable. They are no more than the default value of the independent variable. | In a drug trial, patients with the same illness are divided into an experimental group who are given the drug and a control group who are given a placebo or no drug. |
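The selection strategy described for surveys (item 49) can be sketched in a few lines of Python. This is a minimal illustration only: the records, the `select_similar` helper and the pH tolerance are all hypothetical and are not taken from any real study.

```python
# Hypothetical survey records: (light_intensity, soil_pH, leaf_colour_score).
# All values are invented purely for illustration.
records = [
    (120, 6.1, 3.2),
    (300, 6.0, 4.1),
    (450, 6.2, 4.9),
    (200, 7.4, 2.0),
    (500, 7.5, 3.1),
    (350, 5.0, 5.5),
]


def select_similar(rows, ph_target, tolerance):
    """Keep only rows whose soil pH lies within `tolerance` of `ph_target`."""
    return [row for row in rows if abs(row[1] - ph_target) <= tolerance]


# Only plants growing in soil of similar pH are compared, so that pH is
# effectively 'controlled' after the data have been collected.
matched = select_similar(records, ph_target=6.1, tolerance=0.2)
for light, ph, colour in matched:
    print(f"light={light}  pH={ph}  colour={colour}")
```

Any correlation between light intensity and leaf colour would then be sought within `matched` rather than across all the records. In a real survey the same selection would be needed for every recorded variable, which quickly reduces the amount of usable data.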

1.3 Choosing values

The values of the variables need to be chosen carefully. This is possible in the majority of investigations during trialling. In field studies, where data are collected from variables that change naturally, some of these concepts can only be applied retrospectively.

| Topic | Understanding that: | Example |

| 51. Trial run | … a trial run can be used to establish the broad parameters required of the experiment (scale, range, number) and help in choosing instrumentation and other equipment. | Before drug experiments are carried out, trials are conducted to determine appropriate dosage and appropriate measures of side effects, among other things. |

| 52. The sample | … issues of sample size and representativeness apply in the same way as in sampling a datum (see Measuring a datum). | The choice of sample size and the sampling strategy will affect the validity of the findings. |

| 53. Relative scale | … the choice of sensible values for quantities is necessary if measurements of the dependent variable are to be meaningful. | In differentiating the dissolving times of different chemicals, a large quantity of chemical in a small quantity of water causing saturation will invalidate the results. |

| 54. Range | … the range over which the values of the independent variable are chosen is important in ensuring that any pattern is detected. | An investigation into the effect of temperature on the volume of yeast dough using a range of 20 – 25°C would show little change in volume. |

| 55. Interval | … the choice of interval between values determines whether or not the pattern in the data can be identified. | An investigation into the effect of temperature on enzyme activity would not show the complete pattern if 20°C intervals were chosen. |

| 56. Number | … a sufficient number of readings is necessary to determine the pattern. | The number is determined partly by the range and interval issues above but, in some cases, for the complete pattern to be seen, more readings may be necessary in one part of the range than another. This applies particularly if the pattern changes near extreme values, for example, in a spring extension experiment at the top of the range of the mass suspended on the spring. |
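The link between range, interval and number of readings can be made concrete with a small sketch. The helper `planned_values` and the 0 to 80°C range are assumptions chosen for illustration; they do not come from a specific investigation.

```python
def planned_values(start, stop, interval):
    """List the values of the independent variable from start to stop inclusive."""
    count = int((stop - start) // interval) + 1
    return [start + i * interval for i in range(count)]


# Hypothetical temperature range of 0 to 80 degrees C.
coarse = planned_values(0, 80, 20)  # 20 degree interval
fine = planned_values(0, 80, 5)     # 5 degree interval

print(len(coarse), "readings:", coarse)
print(len(fine), "readings:", fine)
```

With the coarse interval there are only five readings across the range, so a peak lying between two of them could be missed. The finer interval gives more readings over the same range, at the cost of more measurements.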

1.4 Accuracy and precision

The design of the investigation must provide data with sufficient accuracy and precision to answer the question. This consideration should be built into the design of the investigation. Different investigations will require different levels of accuracy and precision depending on their purpose.

| Topic | Understanding that: | Example |

| 57. Determining differences | … there is a level of precision which is sufficient to provide data which will allow discrimination between two or more means. | The degree of precision required to discriminate between the bounciness of a squash ball and a ping pong ball is far less than that required to discriminate between two ping pong balls. |

| 58. Determining patterns | … there is a level of precision which is required for the trend in a pattern to be determined. | Large error of measurement bars on a line graph or dispersed scatter plot points may not allow discrimination between an upward curve or a straight line. |

1.5 Tables

Tables can be used to design an experiment in advance of the data collection and, as such, contribute towards its validity. In this way, tables can be much more than just a way of presenting data, after the data have been collected.

| Topic | Understanding that: | Example |

| 59. Tables | … tables can be used as organisers for the design of an experiment by preparing the table in advance of the whole experiment. A table has a conventional format. | An experiment on the effect of temperature on the dissolving time of sodium chloride: |
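As a rough sketch of using a table as a design organiser, the code below prepares an empty results table before any data are collected. The temperatures, the number of repeats and the column headings are hypothetical choices, not the authors' own table.

```python
# Hypothetical plan: temperatures (degrees C) to test and number of repeat readings.
temperatures = [20, 30, 40, 50, 60]
repeats = 3

header = (
    ["Temperature (°C)"]
    + [f"Time {i} (s)" for i in range(1, repeats + 1)]
    + ["Mean time (s)"]
)
# One row per planned value of the independent variable; cells are left blank
# so that they can be filled in as the readings are taken.
rows = [[str(t)] + [""] * (repeats + 1) for t in temperatures]


def render(header, rows):
    """Render the table as pipe-delimited text, one row per line."""
    lines = ["| " + " | ".join(header) + " |"]
    lines += ["| " + " | ".join(row) + " |" for row in rows]
    return "\n".join(lines)


print(render(header, rows))
```

Writing the table first forces decisions about the range, interval and number of readings to be made before the experiment begins.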

1.6 Reliability and validity of the design

In evaluating the design of an investigation, there are two overarching questions:

- Will the measurements result in sufficiently reliable data to answer the question?

- Will the design result in sufficiently valid data to answer the question?

Evaluating the design of an investigation includes evaluating the reliability and validity of the ideas associated with the making of single measurements and with each and every datum.

| Topic | Understanding that: | Example |

| 60. Reliability of the design | … the reliability of the design includes a consideration of all the ideas associated with the measurement of each and every datum. | Factors associated with the choice of the measuring instruments to be used must be considered e.g. the error associated with each measuring instrument. The sampling of each datum and the accuracy and precision of the measurements should also be considered. This includes the sample size, the sampling technique, relative scale, the range and interval of the measurements, the number of readings, and the appropriate accuracy and precision of the measurements. |

| 61. Validity of the design | … the validity of the design includes a consideration of the reliability (as above) and the validity of each and every datum. | This includes the choice of measuring instrument in relation to whether the instrument is actually measuring what it is supposed to measure. This includes considering the ideas associated with the variable structure and the concepts associated with the fair test. For example, measuring the distance travelled by a car at different angles of a ramp will not answer a question about speed as a function of angle. |

2. Data presentation, patterns and relationships in practical investigations

Having established that the design of an investigation is reliable and valid, what do we need to understand to explore the relationship between one variable and another? Another way of thinking about this is to think of the pattern between two variables or two sets of data. What do we need to understand to know that the pattern is valid and reliable? The way that data are presented allows patterns to be seen.

2.1 Data presentation

There is a close link between graphical representations and the type of variable they represent.

| Topic | Understanding that: | Example |

| 62. Tables | … a table is a means of reporting and displaying data. But a table alone presents limited information about the design of an investigation e.g. control variables or measurement techniques are not always overtly described. | Simple patterns such as directly proportional or inversely proportional relationships can be shown effectively in a table. |

| 63. Bar charts | … bar charts can be used to display data in which the independent variable is categoric and the dependent variable is continuous. | The number of pupils who can and cannot roll their tongues would be best presented on a bar chart. |

| 64. Line graphs | … line graphs can be used to display data in which both the independent variable and the dependent variable are continuous. They allow interpolation and extrapolation. | The length of a spring and the mass applied would be best displayed in a line graph. |

| 65. Scatter graphs (or scatter plots) | … can also be used to display data in which both the independent variable and the dependent variable are continuous. Scatter graphs are often used where there is much fluctuation in the data because they can allow an association to be detected. Widely scattered points can show a weak correlation, points clustered around, for example, a line can indicate a relationship. | The dry mass of the aerial parts of a plant and the dry mass of the roots. |

| 66. Histograms | … histograms can be used to display data in which a continuous independent variable has been grouped into ranges and in which the dependent variable is continuous. | On a sea shore, the distance from the sea could be grouped into ranges and the number of limpets in each range plotted in a histogram. |

| 67. Box and whisker plots | … the box, in box and whisker plots, represents 50% of the data limited by the 25th and 75th percentile. The central line is the median. The limits of the ‘whiskers’ may show either the extremes of the range or the 2.5% and 97.5% values. | Box and whisker plots are often used to compare large data sets. |

| 68. Multi-variate data | … 3D bar charts and line graphs (surfaces) are suitable for some forms of multivariate data. | |

| 69. Other forms of display | … data can be transformed, for example, to logarithmic scales so that they meet the criteria for normality which allows the use of parametric statistics. | Logarithmic transformation is commonly used in clinical and laboratory medicine, weather maps etc. |
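The quantities that define a box and whisker plot (item 67) can be computed with Python's standard `statistics` module. This is a minimal sketch using invented values; note that several conventions exist for calculating percentiles, so other software may give slightly different box limits for the same data.

```python
import statistics

# Invented sample values, used only to illustrate the summary statistics.
values = [12, 15, 11, 19, 14, 22, 13, 17, 16, 30, 18]

# Cut points dividing the data into four equal groups:
# the 25th percentile, the median and the 75th percentile.
q1, median, q3 = statistics.quantiles(values, n=4)

# Here the whiskers are taken as the extremes of the range.
whisker_low, whisker_high = min(values), max(values)

print(f"box: {q1} to {q3}, median line at {median}")
print(f"whiskers: {whisker_low} to {whisker_high}")
```

The box between `q1` and `q3` contains the middle 50% of the data. Choosing the 2.5% and 97.5% values for the whiskers instead would require a different calculation from the `min` and `max` used here.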

2.2 Statistical treatment of measurements of data

There are a large number of statistical techniques for analysing data which address three main questions:

- Do two groups of data differ from each other by more than would be expected from chance alone?

- Do data change when repeated measurements are taken on a second separate occasion?

- Is there an association between two sets of data?

Statistics consider the variability of the data and present a result based on probability. Each statistical technique has associated criteria depending on, for example, the type of data, its distribution, the sample size etc. Some common methods of statistical analysis of data are shown below.

| Topic | Understanding that: |

| 70. Differences between means | … a t-test can be used to estimate the probability that two means from normally distributed populations, derived from an investigation involving a categoric independent variable, are different, i.e. what is the probability that the difference between the two means occurred by chance alone? If measures are repeated with the same or matched pairs, then a paired t-test can be used. |

| 71. Analysis of variance | … analysis of variance is a technique which can be used to estimate the effects of a number of variables in a multi-variate problem involving categoric independent variables. |

| 72. Linear and non-linear regression | … regression can be used to derive the ‘line of best fit’ for data resulting from an investigation involving a continuous independent variable. |

| 73. Non-parametric measures | … when the measurements are not normally distributed, non-parametric tests, such as the Mann-Whitney U-test, can be used to estimate the probability of any differences. |

| 74. Categoric data | … when the data result from an investigation in which both independent and dependent variables are categoric, the analysis of the data can use, for instance, a chi-squared test. |
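To show how a test of the difference between two means takes the variability of the data into account, the sketch below computes a t statistic by hand. It uses Welch's unequal-variance form, which is one common variant chosen here for illustration; the function name `welch_t` and the sample values are hypothetical.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Return (t, degrees_of_freedom) for Welch's unequal-variance t-test."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # Variance of each sample mean: s^2 / n.
    var_a = statistics.variance(sample_a) / len(sample_a)
    var_b = statistics.variance(sample_b) / len(sample_b)
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (var_a + var_b) ** 2 / (
        var_a ** 2 / (len(sample_a) - 1) + var_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


# Invented bounce heights (cm) for two kinds of ball; illustrative only.
ball_a = [41.0, 43.5, 42.2, 40.8, 42.9]
ball_b = [55.1, 53.8, 56.4, 54.9, 55.6]

t, df = welch_t(ball_a, ball_b)
print(f"t = {t:.2f}, df = {df:.1f}")
```

The t statistic is the difference between the means divided by an estimate of its uncertainty, so a large absolute value indicates a difference that is large relative to the scatter in the data. Converting `t` and `df` into a probability requires the t distribution, which is available in statistical tables or in a statistics library.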

2.3 Patterns and relationships in data

Data must be inspected for underlying patterns. Patterns represent the behaviour of variables so that they cannot be treated in isolation from the physical system that they represent. Patterns can be seen in tables or graphs or can be reported by using the results of appropriate statistical analysis. The interpretation of patterns and relationships must respect the limitations of the data: for instance, there is a danger of over-generalisation or of implying causality when there may be a different, less direct type of association.

| Topic | Understanding that: | Example |

| 75. Types of patterns | … there are different types of association such as causal, consequential, indirect or chance associations. “Chance association” means that observed differences in data sets, or changes in data over time, happen simply by chance alone. We must remain open, sceptically, to the possibility that a pattern has emerged by chance alone. Statistical tests give us a rational way to estimate this chance. | In any large multivariate set of data, there will be associations, some of which will be chance associations. Even if x and y are highly correlated, x does not necessarily cause y: y may cause x or z may cause x and y. Also, changes in students’ understanding before and after an intervention may not be significant and/or may be due to other factors. |

| 76. Linear relationships | … straight line relationships (positive slopes, negative, and vertical and horizontal as special cases) can be present in data in tables and line graphs and that such relationships have important predictive power (y = mx + c). | Height and time for a falling object. |

| 77. Proportional relationships | … direct proportionality is a particular case of a straight line relationship with consequent predictive characteristics. The relationship is often expressed in the form (y = mx). | Hooke’s law: the extension of a spring is directly proportional to the force on the spring. |

| 78. ‘Predictable’ curves | … patterns can follow predictable curves (y = x² for instance), and that such patterns are likely to represent significant regularities in the behaviour of the system. | Velocity against time for a falling object. Also, the terminal velocity of a parachute against its surface area. |

| 79. Complex curves | … some patterns can be modelled mathematically to give approximations to different parts of the curve. | Hooke’s law for a spring taken beyond its elastic limit. |

| 80. Empirical relationships | … patterns can be purely empirical and not be easily represented by any simple mathematical relationship. | Traffic flow as a function of time of day. |

| 81. Anomalous data | … patterns in tables or graphs can show up anomalous data points, which require further examination before they are excluded from the analysis. | A ‘bad’ measurement or datum due to human error. |

| 82. Line of best fit | … for line graphs (and scatter graphs in some cases) a ‘line of best fit’ can be used to illustrate the underlying relationship, ‘smoothing out’ some of the inherent (uncontrolled) variation and human error. |
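A ‘line of best fit’ of the form y = mx + c can be obtained by ordinary least squares, one common regression method. The sketch below is a minimal illustration: the function name `line_of_best_fit` and the spring readings are invented for the purpose.

```python
def line_of_best_fit(xs, ys):
    """Ordinary least-squares fit of y = m*x + c; returns (m, c)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations, and sum of squared deviations in x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    c = mean_y - m * mean_x
    return m, c


# Invented spring data: mass added (g) and spring length (cm), illustrative only.
mass = [0, 50, 100, 150, 200]
length = [10.1, 12.0, 14.1, 15.9, 18.0]

m, c = line_of_best_fit(mass, length)
print(f"length = {m:.4f} * mass + {c:.2f}")
```

The fitted line smooths out the small fluctuations in the individual readings. In this example the intercept `c` is close to the unloaded length of the spring, so the data follow y = mx + c rather than the directly proportional form y = mx.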

3. Reliability and validity of the data in the whole investigation

In evaluating the whole investigation, all the foregoing ideas about evidence need to be considered in relation to the two overarching questions:

- Are the data reliable?

- Are the data valid?

In addressing these two questions, ideas associated with the making of single measurements and with each and every datum in an investigation should be considered. The evaluation should also include a consideration of the design of an investigation, ideas associated with measurement, with the presentation of the data and with the interpretation of patterns and relationships.

Data to evidence – comparisons with other data

So far we have considered the data in a single investigation. In reality, the results of an investigation will usually be compared with other data.

| Topic | Understanding that: |

| 83. A series of experiments | … a series of experiments can add to the reliability and validity of evidence even if, individually, their precision does not allow much weight to be placed on the results of any one experiment alone. |

| 84. Secondary Data | … data collected by others are a valuable source of additional evidence, provided their value as evidence can be judged. E.g. meta-analyses. |

| 85. Triangulation | … triangulation with other methods can strengthen the validity of the evidence. |

Relevant societal issues

Evidence must be considered in the light of personal and social experience and the status of the investigators. If we are faced with evidence and we want to arrive at a judgement, then other factors will also come into the equation, some of which are listed below.

| Topic | Understanding that: | Example |

| 86. Credibility of evidence | … credibility has a lot to do with face validity: consistency of the evidence with conventional ideas, with common sense, and with personal experience. Credibility increases with the degree of scientific consensus on the evidence or on theories that support the evidence. Credibility can also turn on the type of evidence presented, for instance, statistical versus anecdotal evidence. | Evidence showing low emissions of dioxins from a smokestack is compromised by photos of black smoke spewing from the smokestack (even though dioxins are relatively colourless). Also, concern for potential health hazards for workers in some industries often begins with anecdotal evidence, but is initially rejected as not being scientifically credible. |

| 87. Practicality of Consequences | … the implications of the evidence may be practical and cost effective, or they may not be. The more impractical or costly the implications, the greater the demand for higher standards of validity and reliability of the evidence. | The negative side effects of a drug may outweigh its benefits, for all but terminally ill patients. Also, when judging the evidence on the source of acid rain, Americans will likely demand a greater degree of certainty of the evidence than Canadians who live down wind, because of the cost to American industries to reduce sulphur. |

| 88. Experimenter bias | … evidence must be scrutinized for inherent bias of the experimenters. Possible bias may be due to funding sources, intellectual rigidity, or an allegiance to an ideology such as scientism, religious fundamentalism, socialism, or capitalism, to name but a few. Bias is also directly related to interest: Who benefits? Who is burdened? | Studying the link between cancer and smoking funded by the tobacco industry; or studying the health effects of genetically modified foods funded by Greenpeace. Also, the acid rain issue (above) illustrates different interests on each side of the Canadian/American border. |

| 89. Power structures | … evidence can be accorded undue weight, or dismissed too lightly, simply by virtue of its political significance or due to influential bodies. Trust can often be a factor here. Sometimes people are influenced by past occurrences of broken trust by government agencies, by industry spokespersons, or by special interest groups. | Studies published in the New England Journal of Medicine tend to receive greater weight than other studies. Also, the pharmaceutical industry’s negative reaction to Dr. Olivieri’s research results that were not supportive of a drug made by Apotex at Toronto’s Hospital for Sick Children in 2001. |

| 90. Paradigms of practice | … different investigators may work within different paradigms of research. For instance, engineers operate from a different perspective than scientists. Thus, evidence garnered within one paradigm may take on quite a different status when viewed from another paradigm of practice. | Theoretical scientists tend to use evidence to support arguments for advancing a theory or model, whereas scientists working for an NGO, for instance, tend to use evidence to solve a problem at hand within a short time period. Theoretical scientists have the luxury of subscribing to higher standards of validity and reliability for their evidence. |

| 91. Acceptability of consequences | … evidence can be denied or dismissed for what may appear to be illogical reasons such as public and political fear of its consequences. Prejudice and preconceptions play a part here. | During the tainted blood controversies in the mid 1980s, the Canadian Red Cross had difficulty accepting evidence concerning the transmission of HIV in blood transfusions. BSE and traffic pollution are examples in Europe. |

| 92. Status of experimenters | … the academic or professional status, experience and authority of the experimenters may influence the weight which is placed on the evidence. | Nobel laureates may have their evidence accepted more easily than new researchers’ evidence. Also, a botanist’s established reputation affects the credibility of his or her testimony concerning legal evidence in a courtroom. |

| 93. Validity of conclusions | … conclusions must be limited to the data available and not go beyond them through inappropriate generalisation, interpolation or extrapolation. | The beneficial effects of a pharmaceutical may be limited to the population sample used in the human trials of the new drug. Also, evidence acquired from a male population concerning a particular cardiac problem may not apply as widely to a female population. |

We are indebted to Glen Aikenhead of the University of Saskatchewan for his detailed comments on this version and for some of the examples used to illustrate the ideas.

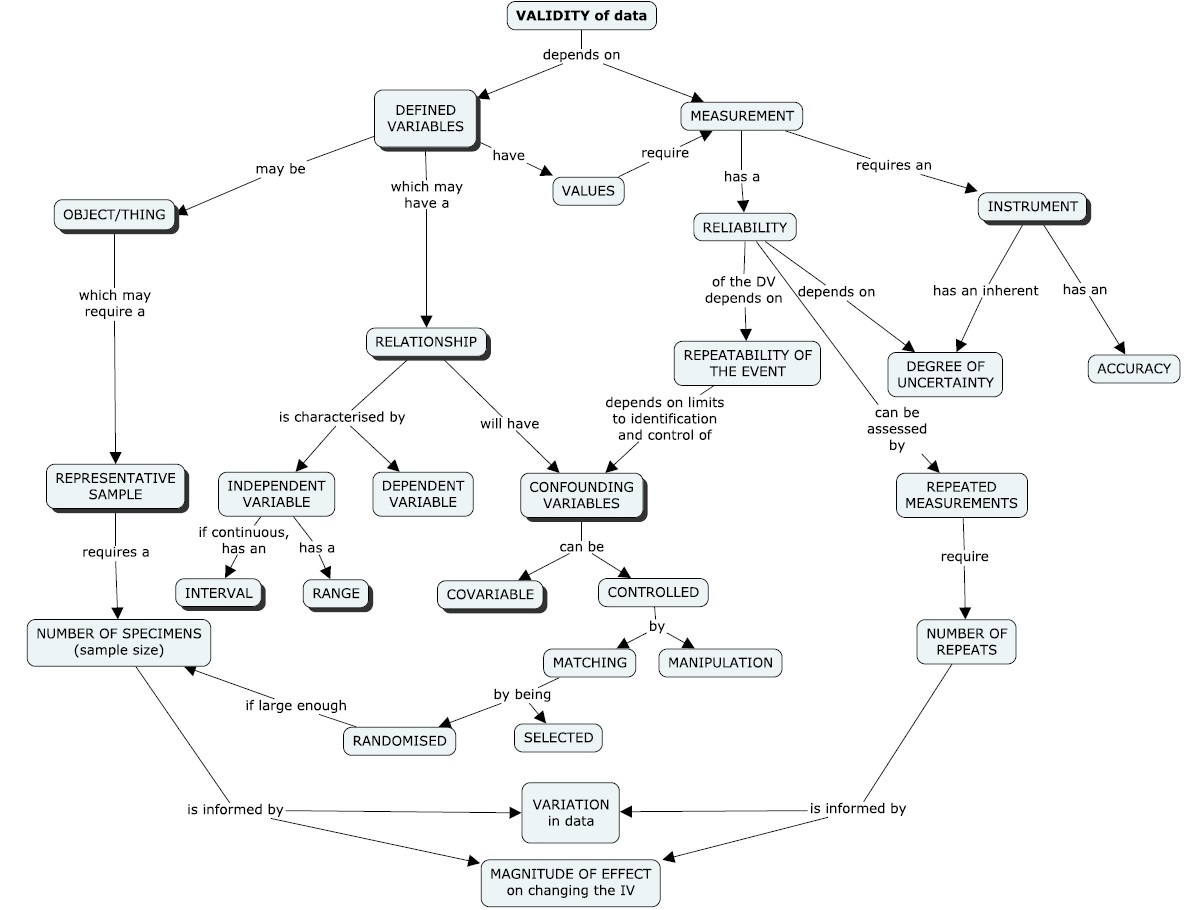

A concept map for ‘the thinking behind the doing’

A concept map with the focus question “What is the ‘thinking behind the doing’ for determining the validity of data?”:

NB: Concepts directly informed by substantive knowledge are highlighted with a shadow on the box.

From: Roberts, R. and Johnson, P. (2015): Understanding the quality of data: a concept map for ‘the thinking behind the doing’ in scientific practice, Curriculum Journal, 26(3), 345-369. DOI: 10.1080/09585176.2015.1044459, where the ideas and their relationships are explained fully and are applied to the decisions made when conducting a lab-based investigation and a fieldwork survey.

Here are some of our most recent publications:

| Roberts, R. (2018) | Biology: the ultimate science for teaching an understanding of scientific evidence. | (pp 225-241) in Challenges in Biology Education Research, Gericke, M. & Grace, M. (Eds). University Printing Office, Karlstad. ISBN 978-91-7063-850-3 |

| Roberts, R. (2017) | Understanding evidence in scientific disciplines: identifying and mapping ‘the thinking behind the doing’ and its importance in curriculum development. | Practice and Evidence of the Scholarship of Teaching and Learning in Higher Education (PESTLHE) Vol 12, No. 2 (2017), 411-4. ISSN 1750-8428 (Special Issue: Threshold Concepts and Conceptual Difficulty; Eds. Ray Land & Julie Rattray). |

| Oshima, R. and Roberts, R. (2017) | Exploring ‘the thinking behind the doing’ in an investigation: students’ understanding of variables. | (Chp 5, pp 69-83) in Jennifer Yeo, Tang Wee Teo, Kok-Sing Tang (Editors) (2017) Science Education Research and Practice in Asia-Pacific and Beyond, Springer, Singapore. ISBN 978-981-10-5148-7. DOI 10.1007/978-981-10-5149-4 |

| Roberts, R. (2016) | Understanding the validity of data: a knowledge-based network underlying research expertise in scientific disciplines. | Higher Education, 72(5), 651-668. DOI: 10.1007/s10734-015-9969-4 |

| Johnson, P. and Roberts, R. (2016) | A concept map for understanding ‘working scientifically’. | School Science Review, 97(360), pp. 21-28 |

| Roberts, R. and Johnson, P. (2015) | Understanding the quality of data: a concept map for ‘the thinking behind the doing’ in scientific practice. | Curriculum Journal, 26(3), 345-369. DOI: 10.1080/09585176.2015.1044459. |

| Roberts, R and Reading, C. (2015) | The practical work challenge: incorporating the explicit teaching of evidence in subject content. | School Science Review, 96(357) pp 31- 39. |

| Roberts, R. and Sahin-Pekmez, E. (2012) | Scientific Evidence as Content Knowledge: a replication study with English and Turkish pre-service primary teachers. | European Journal of Teacher Education, 35(1), 91-109. |

| Roberts, R., and Gott, R. (2010) | Questioning the evidence for a claim in a socio-scientific issue: an aspect of scientific literacy. | Research in Science & Technological Education, 28(3), 203-226 |

| Roberts, R., Gott, R. and Glaesser, R. (2010) | Students’ approaches to open-ended science investigation: the importance of substantive and procedural understanding. | Research Papers in Education. 25(4), 377-407 |

| Roberts, R. (2009) | How Science Works (HSW). | Education in Science. June 2009, no 233, 30-31 |

| Roberts, R. (2009) | Can teaching about evidence encourage a creative approach in open-ended investigations? | School Science Review, 90(332) pp31-38 ISSN: 0036-6811 |

| Glaesser, J., Gott, R., Roberts, R. & Cooper, B. (2009) | Underlying success in open-ended investigations in science: using qualitative comparative analysis to identify necessary and sufficient conditions. | Research in Science and Technological Education, 27,1,5-30. |

| Glaesser, J., Gott, R., Roberts, R. & Cooper, B. (2009) | The roles of substantive and procedural understanding in open-ended science investigations: Using fuzzy set Qualitative Comparative Analysis to compare two different tasks | Research in Science Education. 39, 4 (2009), 595-624. |

| Roberts, R. and Gott, R. (2008) | Practical work and the importance of scientific evidence in science curricula. | Education in Science, Nov 2008, 8-9. |

| Gott, R. and Roberts, R. (2008) | Concepts of evidence and their role in open-ended practical investigations and scientific literacy; background to published papers. | Durham, Durham University |

| Gott R. and Duggan, S. (2007) | A framework for practical work in science and scientific literacy through argumentation | Res. in Sc. and Tech. Educ. 25 (3) |

| Roberts, R and Gott R. (2007) | Questioning the Evidence: research to assess an aspect of scientific literacy. | Proceedings of European Science Education Research Association (ESERA) conference, Malmo, Sweden, August 2007 |

| Roberts, R and Gott R. (2007) | Evidence, investigations and scientific literacy: what are the curriculum implications? | Proceedings of National Association for Research in Science Teaching (NARST) conference, New Orleans, April 2007 |

| Gott R. and Duggan, S. (2006) | Investigations, scientific literacy and evidence | Hatfield |

| Roberts, R and Gott R. (2006) | The role of evidence in the new KS4 National Curriculum and the AQA specifications | School Science Review 87 (321) |

| Roberts, R and Gott R. (2006) | Assessment of performance in practical science and pupil attributes. | Assessment in Education 13 (1) |

| Roberts, R and Gott R. (2004) | A written test for procedural understanding: a way forward for assessment in UK science education | Res. in Sc. and Tech. Educ. 22 (1) |

| Roberts, R. (2004) | Using Different Types of Practical within a Problem-Solving Model of Science. | School Science Review 85 (312) |

| Roberts, R. and Gott, R (2004) | Assessment of Sc1: alternatives to coursework? | School Science Review 85 (313) |

| Gott, R and Duggan, S. (2003) | Understanding and Using Scientific evidence. | Sage, London |

| Gott, R and Duggan S. (2003) | Building success in Sc 1. Workbook and interactive CD ROM | Folens, Bedfordshire. |

| Roberts, R and Gott R (Feb 2003) | Written tests for procedural understanding in science: why? And would they work? | Education in Science, Feb 2003, 16-18. |

| Roberts, R and Gott R (2003) | Assessment of biology investigations. | Jnl. of Biol. Ed. 37, 3, 114-121 |

| Gott R. and Duggan S. (2002) | Performance assessment of practical science in the UK National Curriculum | Cambridge Journal of Education., 32, 2, 183 – 201 |

| Roberts, R and Gott, R.(2002) | Investigations: collecting and using evidence. | In Teaching Scientific Enquiry, ASE/John Murray (Sang D Ed). |

| Duggan S. and Gott R. (2002) | What sort of science do we really need? | Int. J. Sci. Ed. 24, 7, 661-679 |

| Roberts R. 2001 | Procedural understanding in biology: “the thinking behind the doing” | Journal of Biological Education 35 (3) 113-117 |

| Tytler R., Duggan S. and Gott R. 2001 | Public participation in an environmental dispute: implications for science education | Public Understanding of Science 10 343-364 |

| Tytler R., Duggan S. and Gott R. 2001 | Dimensions of evidence, the public understanding of science and science education | Int. J. Sci. Ed., 23, 8, 815-832 |

| Duggan S. and Gott R. 2000 | Intermediate GNVQ science: a missed opportunity? | Research in Science and Technological Education 18 (2) 201-214 |

| Duggan, S. and Gott, R (2000) | Understanding evidence in science: the way to a more relevant curriculum. | In Issues in science teaching. Sears J. and Sorenson P, Routledge, London, pp60-70. |

| Roberts R. and Gott R. 2000 | Procedural understanding in biology: how is it characterised in texts? | School Science Review 82 (298) 83-91 |

| Gott, R, Duggan, S and Roberts, S. (1999) | The science investigation workshop. | Education in Science 183, 26-27 |

| Gott R., Foulds K. and Johnson P. 1997 | Science Investigations Book 1 | Collins Educational |

| Gott R., Foulds K. and Jones M. 1998 | Science Investigations Book 2 | Collins Educational |

| Gott R., Foulds K. and Roberts R. 1999 | Science Investigations Book 3 | Collins Educational |

| Gott R. and Duggan S. 1998 | Understanding scientific evidence – why it matters and how it can be taught. In: ASE Secondary Science Teachers’ Handbook Ed. M. Ratcliffe | Stanley Thornes (Publishers) Ltd |

| Gott R., Duggan S. and Johnson P. 1999 | What do practising applied scientists do and what are the implications for science education? | Research in Science and Technological Education 17 (1) 97-107 |

| Roberts R. and Gott R. 1999 | Procedural understanding: its place in the biology curriculum | School Science Review 81 (294) 19-25 |

Last updated: 11/12/20

To comment on the content of these web pages or for further information,

please contact: [email protected]